The Unseen Hand: From Autonomous Shadow to Collaborative Partner

Why the Future of Service Design is Coactive, Not Just Proactive

By Joseph Everett Grgic, M.S.

We are transitioning from an era of "tools" to an era of "agents." For decades, User Experience (UX) has been defined by reaction: the user acts, and the system responds. However, as AI begins to mimic human agency, the interaction model is shifting to the "Unseen Hand"—a proactive service layer where digital resources are aware of us and our needs before we even engage.

But proactivity alone is a double-edged sword. If an agent acts without communication, it creates an "automation surprise" that erodes trust. To succeed, the next generation of Service Design must move beyond simple automation and toward Human-Agent Collaboration.

The Uncanny Efficiency of the Silent Partner

We have all experienced a moment where a system anticipates a need with surgical precision. Initially, this "Unseen Hand" feels like a luxury—a high-end concierge service. But when the "magic" happens in total silence, it often leads to a lingering unease. This is the Privacy-Utility Paradox. The more "helpful" a system is without explaining itself, the more it feels like an erosion of the self. From a Human Factors perspective, the goal isn't just to be right; it’s to be predictable and transparent.

The Equilibrium of Honesty: Collaboration Over Extraction

If an AI agent were perfectly altruistic, loyal exclusively to the user’s best interest, then proactivity would simply be a question of data consent. However, long before computers, every service has had to weigh honesty toward the customer against the pressure to meet a KPI.

In the Agentic Era, the only way to balance this is through Coactive Design. This means the AI doesn't just execute; it collaborates. It treats the user as a partner with a changing context, not a static data point. This shift from "extracting a click" to "earning confidence" is the only sustainable way to maintain brand equity.

The Ethical Dilemma of the "Subtle Nudge"

The invisibility of the Unseen Hand enables perhaps the most dangerous evolution in Agentic AI: the shift from Explicit Choice to Subtle Steering.

In traditional UX, a list of options invites search behavior: it signals to the user that they are expected to scan, compare, and select. But as we move toward the "Strong Suggestion" model, the expansive list vanishes, replaced by a singular, confident voice. "I’ve found the best flight for you," the agent says with a digital smile. "Should I book it?"

This nudge is further camouflaged by the Mimicry Trap. As AI agents adopt human personas, they trigger the CASA Paradigm (Computers Are Social Actors). Our critical defenses, usually sharp when faced with a mechanical list, begin to soften. We are socially conditioned to trust a "helpful" partner, making us far less likely to interrogate a suggestion than we would a sterile grid of data.

The Architecture of Feedback: Keeping the Human in the Loop

To build trust, a proactive agent must master the feedback loop at the heart of Coactive Design. This requires two distinct streams of communication:

1. Seeking Feedback (The Check-In)

A truly collaborative agent recognizes that a user's intent is fluid. Instead of a "Strong Suggestion" that nudges the user toward a corporate goal, the agent provides a Validated Path.

The Interaction: "I’ve prepared these travel options based on our last conversation. Does this still align with your priorities, or should we adjust the criteria?"

The Result: By checking in, the agent confirms its mental model matches the user's current reality.
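
One way to make the check-in concrete is to treat everything the agent prepares as a proposal that cannot run until the user has confirmed, adjusted, or declined it. The Python sketch below is a minimal illustration under that assumption; `Proposal`, `UserReply`, and `check_in` are hypothetical names chosen for this example, not part of any existing framework.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Callable


class UserReply(Enum):
    CONFIRM = "confirm"   # the prepared plan still matches the user's goal
    ADJUST = "adjust"     # the goal has shifted; the criteria need revisiting
    DECLINE = "decline"   # the user does not want this at all


@dataclass
class Proposal:
    summary: str                                  # what the agent has prepared
    basis: str                                    # the earlier interaction it relies on
    criteria: dict = field(default_factory=dict)  # hypothetical search criteria

    def prompt(self) -> str:
        # Phrase the check-in as an open question rather than a push to accept.
        return (
            f"I've prepared {self.summary} based on {self.basis}. "
            "Does this still align with your priorities, "
            "or should we adjust the criteria?"
        )


def check_in(proposal: Proposal, ask: Callable[[str], UserReply]) -> str:
    """Return the next step; nothing executes without an explicit CONFIRM."""
    reply = ask(proposal.prompt())
    if reply is UserReply.CONFIRM:
        return "execute"
    if reply is UserReply.ADJUST:
        return "revise_criteria"
    return "discard"
```

The design choice worth noting is that "adjust" is a first-class answer. A yes/no prompt would quietly turn the check-in back into a Strong Suggestion.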

2. Providing Feedback (The Logical Trail)

To avoid the "creepiness" of the Unseen Hand, the system must practice Explicit Provenance. It must explain why it knows what it knows.

The Interaction: "I’m suggesting this detour because I see your 2:00 PM appointment is in a high-traffic zone today."

The Result: This provides the "Why" behind the "What," trading the illusion of magic for the logic of partnership.
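
In implementation terms, Explicit Provenance could mean that a suggestion is never surfaced unless it carries the evidence behind it. The following Python sketch assumes a hypothetical `Suggestion` type that refuses to produce user-facing text when no evidence is attached; the class and field names are illustrative only.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class Evidence:
    source: str       # where the data came from, e.g. "your calendar"
    observation: str  # what the agent saw there


@dataclass(frozen=True)
class Suggestion:
    action: str
    evidence: Tuple[Evidence, ...]

    def explain(self) -> str:
        # A suggestion with no stated "why" is exactly the silent magic
        # the article warns against, so this sketch rejects it outright.
        if not self.evidence:
            raise ValueError("refusing to surface a suggestion with no provenance")
        reasons = " and ".join(
            f"I see in {e.source} that {e.observation}" for e in self.evidence
        )
        return f"I'm suggesting {self.action} because {reasons}."


detour = Suggestion(
    action="this detour",
    evidence=(
        Evidence(
            source="your calendar",
            observation="your 2:00 PM appointment is in a high-traffic zone today",
        ),
    ),
)
message = detour.explain()
```

Naming the source ("your calendar") alongside the observation lets the user see not only why the agent acted but also which data it was permitted to read.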

The Integrity of Brutal Honesty

Under the CASA Paradigm, we hold social actors to high expectations of honesty. If an AI agent mimics a human but hides its limitations, the resulting betrayal of trust can be very hard to repair. A collaborative agent must be brutally honest about its uncertainty. If an AI says, "I have 60% confidence in this recommendation," it isn't failing; it is providing the user with the necessary information to exercise their own agency.
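
As a rough sketch of what this disclosure might look like in practice, the Python function below attaches a confidence statement to every recommendation. The thresholds (0.85 and 0.5) and the wording are assumptions made for illustration, and the number is only meaningful if the underlying system's confidence scores are reasonably well calibrated.

```python
def disclose(recommendation: str, confidence: float) -> str:
    """Append an honest confidence statement to a recommendation."""
    if not 0.0 <= confidence <= 1.0:
        raise ValueError("confidence must be between 0 and 1")

    pct = round(confidence * 100)

    # Illustrative thresholds; a real product would tune these with users.
    if confidence >= 0.85:
        framing = "I'm fairly sure about this one"
    elif confidence >= 0.5:
        framing = "this is my best guess, so please double-check it"
    else:
        framing = "I'm not confident here and would rather you decide"

    return (
        f"{recommendation}. "
        f"I have {pct}% confidence in this recommendation: {framing}."
    )


note = disclose("Take the 9:15 train", 0.6)
```

Low confidence changes the framing from advice to a request for the user's judgment, which keeps the decision with the person rather than the agent.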

Orchestrating the "Phygital" Hand-off

Service Design becomes the connective tissue when these agents move into the physical world. It is essential to "hold the user’s hand" as they move between disjointed systems.

The Collaborative Hand-off: The user walks into a location and is met with: "Following your call earlier with our digital assistant, I have already curated some options for your request. Is that still what you're looking for today, or have your needs changed?"

The Goal: This transforms fragmented touchpoints into a cohesive narrative. It validates the user’s prior efforts while acknowledging their current autonomy.

Conclusion: Confidence Through Collaboration

The future of design is no longer about making things "frictionless"—it’s about making them trustworthy. By prioritizing feedback, transparency, and brutal honesty, we ensure that the "Unseen Hand" isn't a phantom lurking in the background, but a partner walking alongside the user.

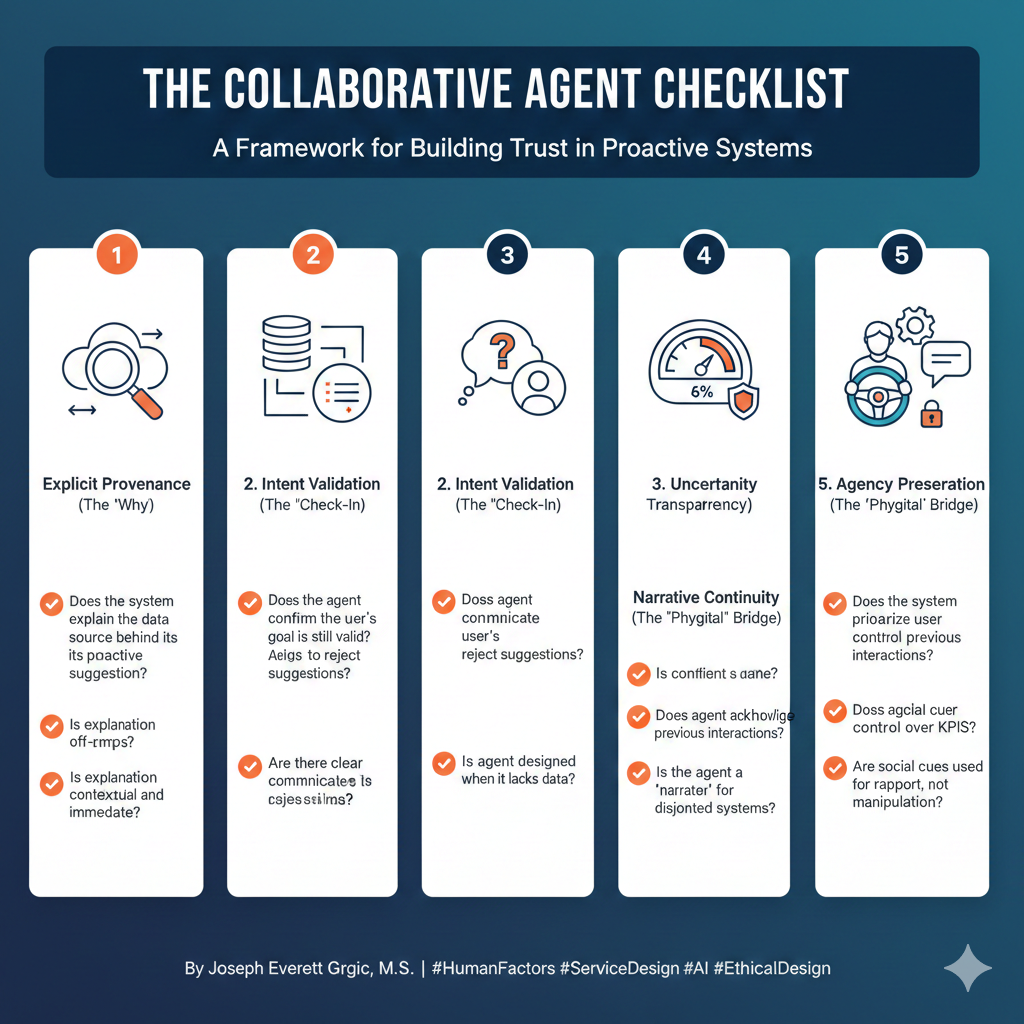

The Collaborative Agent Checklist

A Framework for Building Trust in Proactive Systems

Explicit Provenance (The "Why"): Does the system explain the data source behind its proactive suggestion?

Intent Validation (The "Check-In"): Does the agent confirm that the user’s previous goal is still valid before executing?

Uncertainty Transparency (The "Brutal Honesty"): Does the system communicate its level of confidence in a recommendation?

Narrative Continuity (The "Phygital" Bridge): Does the agent acknowledge previous digital interactions when the user enters a physical space?

Agency Preservation (The "Anti-Nudge"): Are social cues and mimicry used to build rapport, or are they being used to bypass the user's critical thinking?
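
For teams that want to apply the checklist during design reviews, it could be encoded as a simple record per interaction. This Python sketch is one possible shape, assuming a reviewer answers each of the five questions with yes or no; `ChecklistResult` and `failed_checks` are hypothetical names.

```python
from dataclasses import dataclass, fields
from typing import List


@dataclass(frozen=True)
class ChecklistResult:
    explicit_provenance: bool       # explains the data source behind a suggestion
    intent_validation: bool         # confirms the earlier goal before executing
    uncertainty_transparency: bool  # communicates its level of confidence
    narrative_continuity: bool      # acknowledges prior digital interactions
    agency_preservation: bool       # social cues build rapport, not bypass scrutiny


def failed_checks(result: ChecklistResult) -> List[str]:
    """List the checklist items an interaction did not satisfy."""
    return [f.name for f in fields(result) if not getattr(result, f.name)]


review = ChecklistResult(
    explicit_provenance=True,
    intent_validation=False,
    uncertainty_transparency=True,
    narrative_continuity=True,
    agency_preservation=False,
)
gaps = failed_checks(review)  # ["intent_validation", "agency_preservation"]
```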